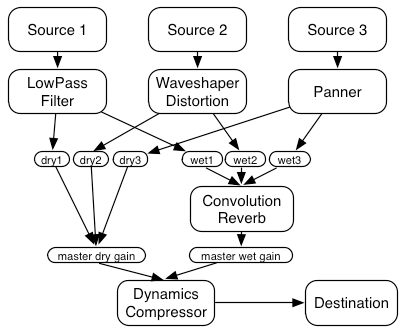

(lpf, ws, pan) : par(i, 3, _ <: (dry, wet)) :> (gain, (creverb : gain)) :> comp

```javascript
// manTalkingBuffer and footstepsBuffer are assumed to be AudioBuffers
// that have already been loaded and decoded elsewhere.
function setupRoutingGraph() {
  const context = new AudioContext();

  // Create the effects nodes.
  const lowpassFilter = context.createBiquadFilter();
  const waveShaper = context.createWaveShaper();
  const panner = context.createPanner();
  const compressor = context.createDynamicsCompressor();
  const reverb = context.createConvolver();

  // Create master wet and dry.
  const masterDry = context.createGain();
  const masterWet = context.createGain();

  // Connect final compressor to final destination.
  compressor.connect(context.destination);

  // Connect master dry and wet to compressor.
  masterDry.connect(compressor);
  masterWet.connect(compressor);

  // Connect reverb to master wet.
  reverb.connect(masterWet);

  // Create a few sources.
  const source1 = context.createBufferSource();
  const source2 = context.createBufferSource();
  const source3 = context.createOscillator();

  source1.buffer = manTalkingBuffer;
  source2.buffer = footstepsBuffer;
  source3.frequency.value = 440;

  // Connect source1.
  const dry1 = context.createGain();
  const wet1 = context.createGain();
  source1.connect(lowpassFilter);
  lowpassFilter.connect(dry1);
  lowpassFilter.connect(wet1);
  dry1.connect(masterDry);
  wet1.connect(reverb);

  // Connect source2.
  const dry2 = context.createGain();
  const wet2 = context.createGain();
  source2.connect(waveShaper);
  waveShaper.connect(dry2);
  waveShaper.connect(wet2);
  dry2.connect(masterDry);
  wet2.connect(reverb);

  // Connect source3.
  const dry3 = context.createGain();
  const wet3 = context.createGain();
  source3.connect(panner);
  panner.connect(dry3);
  panner.connect(wet3);
  dry3.connect(masterDry);
  wet3.connect(reverb);

  // Start the sources now.
  source1.start(0);
  source2.start(0);
  source3.start(0);
}
```
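The same graph can be read as the block-diagram expression at the top of the slide: each of the three effect outputs is split into a dry and a wet gain, all dry paths are summed into the master dry gain, all wet paths are summed into the reverb feeding the master wet gain, and both masters are summed into the compressor. A minimal sketch of that reading, assuming a toy model in which a box is a function on one frame of samples: `box`, `seq`, `par`, `split` and `merge` are hypothetical helpers imitating Faust's `:`, `,`, `<:` and `:>`, and the effects are memoryless stand-ins (real filters, convolvers and compressors carry state).

```javascript
// A box has `ins` inputs, `outs` outputs, and a function from an array of
// `ins` samples to an array of `outs` samples (one frame at a time).
const box = (ins, outs, fn) => ({ ins, outs, fn });

// Sequential composition (Faust `:`): outputs of a feed the inputs of b.
function seq(a, b) {
  if (a.outs !== b.ins) throw new Error("seq: arity mismatch");
  return box(a.ins, b.outs, (xs) => b.fn(a.fn(xs)));
}

// Parallel composition (Faust `,`): a and b side by side.
function par(a, b) {
  return box(a.ins + b.ins, a.outs + b.outs, (xs) =>
    a.fn(xs.slice(0, a.ins)).concat(b.fn(xs.slice(a.ins))));
}

// Split (Faust `<:`): input j of b receives output j % a.outs of a.
function split(a, b) {
  if (b.ins % a.outs !== 0) throw new Error("split: arity mismatch");
  return box(a.ins, b.outs, (xs) => {
    const ys = a.fn(xs);
    return b.fn(Array.from({ length: b.ins }, (_, j) => ys[j % a.outs]));
  });
}

// Merge (Faust `:>`): output i of a is summed into input i % b.ins of b.
function merge(a, b) {
  if (a.outs % b.ins !== 0) throw new Error("merge: arity mismatch");
  return box(a.ins, b.outs, (xs) => {
    const sums = new Array(b.ins).fill(0);
    a.fn(xs).forEach((y, i) => { sums[i % b.ins] += y; });
    return b.fn(sums);
  });
}

// Memoryless stand-ins for the real nodes (assumptions for illustration).
const wire = box(1, 1, ([x]) => [x]);
const gain = (k) => box(1, 1, ([x]) => [k * x]);
const lpf = gain(0.9);
const ws = box(1, 1, ([x]) => [Math.tanh(x)]);
const pan = gain(0.7);
const creverb = gain(0.5);
const comp = box(1, 1, ([x]) => [Math.max(-1, Math.min(1, x))]);

// One source path: effect, then fan out into a dry and a wet gain.
const path = (fx) => seq(fx, split(wire, par(gain(1.0), gain(0.3))));

// Three paths in parallel; dry outputs are summed into the master dry gain,
// wet outputs into the reverb and master wet gain, and both into comp.
const graph = merge(
  merge(par(par(path(lpf), path(ws)), path(pan)),
        par(gain(1.0), seq(creverb, gain(1.0)))),
  comp);
```

No `connect` call appears: the topology lives entirely in the expression, and `seq`, `split` and `merge` reject mismatched arities when the graph is built rather than while audio runs.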

AudioWorkers?

```javascript
// State kept between calls (and, as written, shared by all channels).
var phaser = 0;
var lastDataValue = 0;

onaudioprocess = function (e) {
  for (var channel = 0; channel < e.inputBuffers.length; channel++) {
    var inputBuffer = e.inputBuffers[channel];
    var outputBuffer = e.outputBuffers[channel];
    var bufferLength = inputBuffer.length;
    // Per-sample parameter values; defaults are used when absent.
    var bitsArray = e.parameters.bits;
    var frequencyReductionArray = e.parameters.frequencyReduction;

    for (var i = 0; i < bufferLength; i++) {
      var bits = bitsArray ? bitsArray[i] : 8;
      var frequencyReduction = frequencyReductionArray ? frequencyReductionArray[i] : 0.5;
      // Quantization step for the requested bit depth.
      var step = Math.pow(1 / 2, bits);
      // Sample-and-hold: take a new quantized sample each time the phase wraps.
      phaser += frequencyReduction;
      if (phaser >= 1.0) {
        phaser -= 1.0;
        lastDataValue = step * Math.floor(inputBuffer[i] / step + 0.5);
      }
      outputBuffer[i] = lastDataValue;
    }
  }
};
```
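The worker keeps `phaser` and `lastDataValue` as mutable state that survives between calls, so its output depends on a call history that is not visible in its arguments. A hedged sketch of the same algorithm in pure style threads that state explicitly; `crushBlock` is a hypothetical name, and for brevity it takes scalar `bits` and `frequencyReduction` instead of per-sample arrays.

```javascript
// Pure block-based bit crusher: the state travels in and out explicitly,
// so equal (input, params, state) always give equal results.
// `crushBlock` is a hypothetical name; params are scalars for brevity.
function crushBlock(input, params, state) {
  const bits = params.bits ?? 8;
  const frequencyReduction = params.frequencyReduction ?? 0.5;
  const step = Math.pow(1 / 2, bits); // quantization step for `bits` bits
  let { phaser, lastDataValue } = state; // local copies; `state` is not mutated
  const output = new Float32Array(input.length);
  for (let i = 0; i < input.length; i++) {
    phaser += frequencyReduction;
    if (phaser >= 1.0) {
      phaser -= 1.0;
      // Round to the nearest multiple of `step` and hold that value.
      lastDataValue = step * Math.floor(input[i] / step + 0.5);
    }
    output[i] = lastDataValue;
  }
  return { output, state: { phaser, lastDataValue } };
}
```

Calling it twice with the same block, parameters and state gives identical results, and the caller decides whether to feed the returned `state` into the next block.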

let step = 1/2 ^ bits in

vectorize(1/fr)[1]: (/step) : (+0.5) : floor : (*step) : dup(1/fr)
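The rounding chain `(/step) : (+0.5) : floor : (*step)` is ordinary function composition. A small sketch, assuming a hypothetical left-to-right `pipe` helper as the JavaScript counterpart of `:`, checks that the composed pipeline computes the same value as the worker's `step * Math.floor(x / step + 0.5)`; the sample-and-hold part (`vectorize`/`dup`) is not modeled here.

```javascript
// Left-to-right composition, the JavaScript counterpart of `:`
// (`pipe` is a hypothetical helper, not part of any audio API).
const pipe = (...fs) => (x) => fs.reduce((acc, f) => f(acc), x);

// `(/step) : (+0.5) : floor : (*step)` with step = (1/2)^bits.
function quantizer(bits) {
  const step = Math.pow(1 / 2, bits);
  return pipe(
    (x) => x / step,
    (x) => x + 0.5,
    Math.floor,
    (x) => x * step
  );
}
```

For example, `[0.1, 0.3, 0.6, 0.9].map(quantizer(2))` gives `[0, 0.25, 0.5, 1]`.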

Can WebAudio be Liberated from the Von Neumann Style?

Conventional programming languages are growing ever more enormous, but not stronger [...] their primitive word-at-a-time style of programming inherited from their common ancestor--the von Neumann computer, [...] their inability to effectively use powerful combining forms for building new programs from existing ones, and their lack of useful mathematical properties for reasoning about programs.

An alternative functional style of programming is founded on the use of combining forms for creating programs. Functional programs deal with structured data, [...] do not require the complex machinery of procedure declarations to become generally applicable. Combining forms can use high level programs to build still higher level ones in a style not possible in conventional languages.

from John Backus's Turing Award Lecture

Question 1

Functional Programming?

Pure, Strongly Typed Functional Programming is

Referentially Transparent:

- No global state

- Program behaviour is fully determined by inputs

- Reasoning is local; programs are closer to maths

execute : Mem → Instr → (Mem, Instr)

execute m i = ...
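The signature above turns the von Neumann machine itself into a pure function: memory goes in, a new memory comes out. A toy sketch of that idea, with an instruction format invented for this example (`add` and `halt`, each instruction holding a reference to its successor), shows that `execute` never mutates its input, so the same memory and instruction always give the same result.

```javascript
// Toy machine: memory is an array of numbers, and each instruction holds a
// reference to its successor (a format invented for this example).
//   { op: "add", dst, a, b, next }  computes mem[dst] = mem[a] + mem[b]
//   { op: "halt" }                  stops
// execute : Mem -> Instr -> (Mem, Instr), with no mutation of its inputs.
function execute(mem, instr) {
  switch (instr.op) {
    case "add": {
      const out = mem.slice(); // copy, so the caller's memory is untouched
      out[instr.dst] = mem[instr.a] + mem[instr.b];
      return [out, instr.next];
    }
    case "halt":
      return [mem, instr];
    default:
      throw new Error(`unknown op: ${instr.op}`);
  }
}

// Running a program is just iterating the pure step function.
function runProgram(mem, instr, maxSteps = 1000) {
  for (let s = 0; s < maxSteps && instr.op !== "halt"; s++) {
    [mem, instr] = execute(mem, instr);
  }
  return mem;
}

const halt = { op: "halt" };
const program = {
  op: "add", dst: 2, a: 0, b: 1,
  next: { op: "add", dst: 2, a: 2, b: 2, next: halt },
};
```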

Compositional:

f.connect(g)

⇓

$g ∘ f ≡ f : g$

Don't connect, Compose!

$⟦f : g⟧ = ⟦f⟧ ; ⟦g⟧$
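The correspondence between `f.connect(g)` and `f : g` can be checked on a toy model. In the sketch below, `ToyNode` is a hypothetical, much-simplified stand-in for an audio node (it is not the Web Audio API) and `seq` is plain composition; pushing a sample through the connected nodes gives the same value as applying `seq(f, g)`, and `seq` is associative, so longer chains need no parentheses.

```javascript
// Imperative style: a much-simplified node that is wired with connect()
// (a hypothetical stand-in, not the Web Audio API).
class ToyNode {
  constructor(fn) {
    this.fn = fn;
    this.targets = [];
  }
  connect(other) {
    this.targets.push(other);
    return other;
  }
  // Push one sample through this node and everything downstream;
  // values leaving a node with no targets are collected in `sink`.
  push(x, sink) {
    const y = this.fn(x);
    if (this.targets.length === 0) sink.push(y);
    for (const t of this.targets) t.push(y, sink);
  }
}

// Functional style: `f : g` is plain composition, g ∘ f.
const seq = (f, g) => (x) => g(f(x));

const f = (x) => 0.8 * x;
const g = (x) => x + 0.1;

const nf = new ToyNode(f);
const ng = new ToyNode(g);
nf.connect(ng);
const sink = [];
nf.push(1.0, sink);
```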

Strongly-Typed:

$⊢ f :: A → B$

"$f$ takes an $A$, returns a $B$"

$$ \frac{⊢ f :: A → B \qquad ⊢ g :: B → C}{⊢ f : g :: A → C} $$
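As a worked instance, assume for illustration that the shaders `(* 0.8)` and `gain(.5)` both map a real-valued stream to a real-valued stream, written ℝ → ℝ. The rule then types their composition:

$$ \frac{⊢ (*\,0.8) :: ℝ → ℝ \qquad ⊢ \mathit{gain}(.5) :: ℝ → ℝ}{⊢ (*\,0.8) : \mathit{gain}(.5) :: ℝ → ℝ} $$

Conversely, a split such as $⊢ (\_ <: (dry, wet)) :: ℝ → ℝ × ℝ$ cannot be followed by $comp :: ℝ → ℝ$ using `:`, because the middle types $ℝ × ℝ$ and $ℝ$ differ; the two paths must first be merged, as the `:>` in the routing expression does.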

$⊢ f :: \{ x ∈ ℕ \mid even(x) \} → ℝ$

$f(2)$ Ok! $\qquad f(3)$ Wrong!

Types are Static Specifications

checked at compile-time

well-typed programs are free of the runtime errors their types rule out

Question 2

Why Strongly-Typed FP?

Avoid mistakes and boring, repetitive tasks:

- Streams, buffers, synchronization, feedback loops...

- Functions: a very good abstraction!

- Correct by construction approach.

- Types include timing information!

Run fast:

Efficient audio is a hard, low-level problem. How can we help?

- Statically-Typed FP code: fast compiled code.

- Referential transparency enables a world of optimizations.

- Efficient code for fusion/multirate.

- Different implementation choices.
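One of those optimizations, loop fusion, is sound precisely because the stages are referentially transparent. A hedged sketch with two hypothetical per-sample stages `f` and `g`: the two-pass version materializes an intermediate buffer, the fused version computes `g(f(x))` in a single loop, and both produce identical output.

```javascript
// Two passes: each stage fills its own intermediate buffer.
function twoPass(input, f, g) {
  const tmp = input.map(f);
  return tmp.map(g);
}

// Fused: one loop and no intermediate buffer. This rewrite is safe because
// f and g are pure, so computing g(f(x)) sample by sample gives the same
// values as running the stages one after the other.
function fused(input, f, g) {
  const out = new Float64Array(input.length);
  for (let i = 0; i < input.length; i++) out[i] = g(f(input[i]));
  return out;
}

// Example stages (hypothetical).
const f = (x) => 0.8 * x;
const g = (x) => x + 0.1;
const input = Float64Array.from([0, 0.25, -0.5, 1, 0.3]);
```

If `f` and `g` both read and wrote shared mutable state, such as the AudioWorker's `phaser`, interleaving their calls could change the result, and a compiler could not apply this rewrite safely.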

Welcome AudioShaders!

Similar to AudioWorkers, but written in a strongly-typed purely functional DSP domain-specific language.

Use streams, functions and feedback

l = AudioShader("(* 0.8) : feed x = .2*x in (+ x) ");

g = AudioShader("gain(.5)");

c = compose(l, g);

should be indistinguishable from:

c = AudioShader("(* 0.8) : feed x = .2*x in (+ x) : gain(.5)");
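The claim that `compose(l, g)` and the single-string shader behave identically can be illustrated with a small model. Everything here is an assumption: a shader is modeled as a factory returning a fresh per-sample processor, `(* 0.8)` and `gain(.5)` are scalings, and `feed x = .2*x in (+ x)` is read as adding 0.2 times the previous output to the current input, y[n] = u[n] + 0.2 * y[n-1]. The DSL's actual semantics may differ.

```javascript
// Assumed model: a shader is a factory that returns a fresh per-sample
// processor, so every instance starts with its own state.
const scale = (k) => () => (x) => k * x;   // `(* k)`
const gain = (k) => () => (x) => k * x;    // `gain(k)`, modeled as a scaling

// Hypothetical reading of `feed x = .2*x in (+ x)`:
// y[n] = u[n] + k * y[n-1], with y[-1] = 0.
const feedback = (k) => () => {
  let prev = 0;
  return (u) => {
    const y = u + k * prev;
    prev = y;
    return y;
  };
};

// Sequential composition of any number of shaders (the `:` operator).
const compose = (...shaders) => () => {
  const procs = shaders.map((s) => s());
  return (x) => procs.reduce((acc, p) => p(acc), x);
};

// Run a fresh instance of a shader over an input array.
const run = (shader, input) => input.map(shader());

const l = compose(scale(0.8), feedback(0.2));
const g = gain(0.5);
const c1 = compose(l, g);                                   // compose(l, g)
const c2 = compose(scale(0.8), feedback(0.2), gain(0.5));   // one pipeline
```

Because each instance owns its state, running `c1` twice on the same input also yields the same output, which is what lets a browser inline or reorder such shaders freely.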

Idea obviously borrowed from OpenGL/GLSL

AudioShaders enjoy referential transparency and simple semantics, facilitating the job of the browser.

Strong Types, Strong Possibilities

What does this bring us with respect to the current API?

- Reusable, inline-friendly components.

- Run at different rates.

- Manage complex "patching", including control.

- Bit-precise output vs fast-mode.

- Subsumption of existing native nodes.

- Frequency vs time domain shaders.

- Cost model for memory/time, latency guarantees.

- Formal semantics, security.

Interfacing with the JS World:

Use rich, strong types for each AudioShader.

declare-shader bitCrusher (a_rate c_rate : rate) {

fr : float {0 <= fr <= 1.0} @ c_rate;

input : int @ a_rate;

output : int @ a_rate;

}

Thanks to rate typing, AudioParams become regular streams!
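With rates in the types, an a-rate input and a c-rate parameter are both just streams related by a known rate ratio; in the Web Audio API a k-rate AudioParam likewise takes one value per render quantum. A hedged sketch, assuming a zero-order hold as the conversion between rates and a fixed integer `ratio`: `holdToAudioRate`, `checkFr` and `zipWith` are hypothetical helpers, and `checkFr` is only a runtime stand-in for the `{0 <= fr <= 1.0}` refinement, which the proposal would check at compile time.

```javascript
// Upsample a control-rate stream to audio rate by holding each value
// for `ratio` samples (zero-order hold).
function holdToAudioRate(control, ratio) {
  const out = new Float64Array(control.length * ratio);
  control.forEach((v, k) => out.fill(v, k * ratio, (k + 1) * ratio));
  return out;
}

// Runtime stand-in for the refinement {0 <= fr <= 1.0}; also rejects NaN.
function checkFr(fr) {
  if (!(fr >= 0 && fr <= 1.0)) throw new RangeError(`fr out of range: ${fr}`);
  return fr;
}

// Once both streams run at the same rate, combining them is a pointwise zip.
function zipWith(f, xs, ys) {
  const n = Math.min(xs.length, ys.length);
  const out = new Float64Array(n);
  for (let i = 0; i < n; i++) out[i] = f(xs[i], ys[i]);
  return out;
}
```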

Semantics/Implementation:

Compilation is a well studied problem in the synchronous programming language community.

"Clocked" programs

Local latency in the type, deterministic.

Compute the appropriate buffer sizes, erase all branching and clock information. No garbage collection.

Efficient audio poses some new challenges.
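Computing buffer sizes statically is a standard problem in synchronous dataflow (SDF): if a producer emits `p` samples per firing and its consumer reads `c`, balancing the edge fixes how often each must fire per period, and simulating a schedule bounds the buffer. The sketch below illustrates that textbook technique for a single edge; it is not necessarily the method the proposal uses, and `repetitions` and `peakOccupancy` are hypothetical names.

```javascript
// Greatest common divisor (Euclid).
const gcd = (a, b) => (b === 0 ? a : gcd(b, a % b));

// Balance equation for one edge A -> B: A produces p samples per firing,
// B consumes c. Firing A c/g times and B p/g times (g = gcd(p, c))
// produces and consumes the same number of samples, so the buffer returns
// to its initial fill at the end of each period.
function repetitions(p, c) {
  const g = gcd(p, c);
  return { a: c / g, b: p / g };
}

// Simulate one period, firing B whenever it has enough input and A
// otherwise, and report the largest number of samples ever buffered.
function peakOccupancy(p, c) {
  const { a, b } = repetitions(p, c);
  let tokens = 0, firedA = 0, firedB = 0, peak = 0;
  while (firedA < a || firedB < b) {
    if (tokens >= c && firedB < b) {
      tokens -= c;
      firedB++;
    } else {
      tokens += p;
      firedA++;
      peak = Math.max(peak, tokens);
    }
  }
  return peak;
}
```

For `p = 3, c = 2`, the producer fires twice and the consumer three times per period; firing the consumer as soon as it can keeps at most 4 samples buffered, whereas running both producer firings first would need 6.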

The Bad Side:

We are all afraid of Alien Technology

- Much more complexity to handle.

- YAAL.

- Transport Issues? IO?

- Will it work?

Where to Go from Here?

Practice/Implementation:

A proof of concept using Faust already exists.

Don't miss the demo tomorrow!

Theory:

In development. Small DSP core formalized in Coq, more to come.